Introducing the LCMD Ecosystem: An All-in-One Personal Cloud and AI Computing Solution

NEW YORK CITY, NY, UNITED STATES, August 26, 2025 /EINPresswire.com/ -- Cloud fatigue is real—from subscription lock-ins to privacy concerns and sluggish latency, users are increasingly disillusioned with the centralized nature of cloud storage services. Meanwhile, although the intelligence of large AI models continues to surpass expectations, what they can truly contribute to everyday life for ordinary users remains an open question.

LCMD thinks it’s time to reclaim control. Launching August 26 on Kickstarter, the company introduces the LCMD ecosystem (including a MicroServer and an AI POD) that gives users an all-in-one personal cloud and AI computing solution—built to be owned, run, and trusted locally.

The Core: LCMD MicroServer—Private Cloud Customers Actually Own

At the heart of the LCMD ecosystem is the MicroServer, a compact yet powerful home server designed to take over everything customers' cloud used to do—only faster, safer, and subscription-free.

Powered by a 13th Gen Intel® Core™ i5-13500H CPU, with up to 64 GB DDR5 memory and a 7-bay all-SSD storage array that supports up to 96TB, the MicroServer also packs comprehensive I/O—2.5 GbE LAN, dual USB-C, triple USB 3.0, HDMI 2.1, 3.5 mm audio and a DC 5525 power input—for fast connectivity and stable operation.

Key Highlights

- Advanced LZCOS design with a three-layer system ensures enhanced stability and reliability.

- Professional data security with end-to-end encryption and zero-trust hardware.

- Access to over 2000 Apps through a built-in app store, with one-click installation and no subscription fees.

- AI-powered smart photo search, voice-controlled smart TV, multi-device real-time sync, and more.

- Seamless support for Windows, Linux, macOS, Android, iOS, and HarmonyOS.

- Supports KVM, PVE, Docker, LPK cross-compilation, Git hosting, and CI builds.

- Built-in Btrfs file system with hourly file snapshots and efficient copy-on-write, minimizing data loss and speeding up recovery.

- Supports RAID0/1 (with RAID5/6/10 coming soon) and seamless external drive expansion, easily scaling storage capacity to 256TB, 512TB, and beyond.
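The hourly Btrfs snapshots mentioned in the highlights can be pictured with the standard `btrfs` command-line tool. The sketch below is illustrative only and is not LCMD's implementation: the subvolume path `/data`, the snapshot directory `/data/.snapshots`, and the naming scheme are all assumptions.

```python
from datetime import datetime
from pathlib import Path
import subprocess


def snapshot_command(subvolume: str, snapshot_dir: str, now: datetime) -> list[str]:
    """Build a read-only `btrfs subvolume snapshot` command for the current hour."""
    # Hypothetical naming scheme: one snapshot per hour, e.g. 20250826-1400.
    name = now.strftime("%Y%m%d-%H00")
    destination = Path(snapshot_dir) / name
    # `-r` creates a read-only snapshot; copy-on-write means unchanged
    # data is shared with the source subvolume instead of being copied.
    return ["btrfs", "subvolume", "snapshot", "-r", subvolume, str(destination)]


def take_snapshot(subvolume: str = "/data", snapshot_dir: str = "/data/.snapshots") -> None:
    """Run the snapshot command (needs root and a Btrfs file system)."""
    subprocess.run(snapshot_command(subvolume, snapshot_dir, datetime.now()), check=True)
```

Scheduling `take_snapshot()` once an hour, for example from cron or a systemd timer, would give the hourly cadence described above.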

With a design inspired by spacecraft modules and an intuitive onboarding experience, the MicroServer is as much a digital vault as it is an everyday productivity engine.

The Muscle: LCMD AI POD—AI Performance Without the Cloud

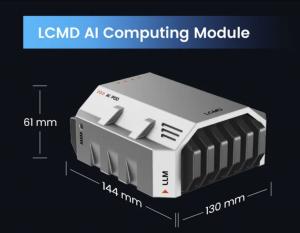

Alongside the MicroServer, LCMD introduces the AI POD, a dedicated AI computing unit designed to handle intensive local workloads with speed and efficiency.

Built on NVIDIA's Ampere architecture, the module integrates a high-performance GPU with up to 64GB of LPDDR5 GPU memory and delivers up to 275 TOPS of AI computing power. With a CPU running at up to 2.2GHz, it supports advanced tasks such as locally deploying AI models with up to 70 billion parameters, 8K video processing, on-device inference, and real-time data analysis—all while keeping operations fully local and cloud-independent.

Key Highlights (When paired with MicroServer)

- One MicroServer can manage any number of AI PODs, with no upper limit.

- AI-powered knowledge management and search: document search and key-insight extraction, semantic video search, and text extraction from images (including handwriting).

- Powerful content understanding and transformation: immersive webpage translation, summarization and rewriting, and quick input translation and rewriting.

- Creative generation and multimedia enhancement: text-to-image generation, text-to-video generation, Ollama Q&A, and video/podcast summarization.

- Built-in AI that summarizes and analyzes online search results, saving up to 90% of manual work.
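The Ollama Q&A feature listed above can be pictured as a plain HTTP call to an Ollama server. This is a minimal sketch under stated assumptions: it uses Ollama's standard `/api/generate` endpoint on its default port 11434, assumes the server is reachable at `localhost`, and uses `llama3` as a placeholder model name. The release does not describe how the AI POD exposes Ollama, so the host and model here are hypothetical.

```python
import json
import urllib.request


def build_generate_request(prompt: str, model: str = "llama3",
                           host: str = "http://localhost:11434") -> urllib.request.Request:
    """Build a non-streaming request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    request = urllib.request.Request(
        f"{host}/api/generate",
        data=json.dumps(payload).encode("utf-8"),  # a body makes this a POST
    )
    request.add_header("Content-Type", "application/json")
    return request


def ask(prompt: str) -> str:
    """Send the prompt to a running Ollama server and return its answer text."""
    with urllib.request.urlopen(build_generate_request(prompt)) as reply:
        return json.loads(reply.read())["response"]
```

Calling `ask("Summarize my meeting notes")` would only work with an Ollama server running and the chosen model already pulled; nothing leaves the local network in that setup.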

Together, the MicroServer and AI POD form a complete local AI stack—ideal for developers, creatives, engineers, and households with rising data autonomy needs.

Users who opt out of the LCMD MicroServer Kit can still turn the AI POD into a standalone AI computer: plug a monitor into the AI POD’s HDMI port, connect a mouse and keyboard to its USB ports, and it is ready to use right away.

The device comes pre-installed with Ubuntu, giving users full flexibility to install AI software and customize models according to their own needs and scenarios. And if you prefer a different operating system, you can reflash the unit to swap Ubuntu out for the OS of your choice.

The Difference Between MicroServer and AI POD

Use Cases:

- MicroServer: a data and network hub for home or enterprise environments, ideal for storage, backup, remote access, and lightweight edge inference.

- AI POD: a high-performance AI computing node built for large-scale model training and inference.

Memory Type:

- MicroServer: 64GB system memory for general system use and small-to-mid-scale inference.

- AI POD: 64GB GPU memory, optimized for large-model acceleration.

Model Compatibility:

- MicroServer: Ideal for lightweight or quantized models such as LLaMA 7B or Mistral 7B, including models packaged in the GGUF format.

- AI POD: Supports full-scale models up to 70B parameters.
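A rough way to relate the model sizes above to 64GB of memory is to estimate the space the weights alone occupy at a given precision. The sketch below is a back-of-envelope calculation, not an LCMD sizing tool: it counts weights only, ignores the KV cache, activations, and runtime overhead, and uses decimal gigabytes. The function names are illustrative.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate weight storage in decimal GB: parameters x bits / 8."""
    return params_billion * bits_per_weight / 8


def fits(params_billion: float, bits_per_weight: int, memory_gb: float = 64) -> bool:
    """True if the weights alone fit in the given memory budget."""
    return weight_memory_gb(params_billion, bits_per_weight) <= memory_gb


if __name__ == "__main__":
    for size, bits in [(7, 16), (70, 16), (70, 8), (70, 4)]:
        gb = weight_memory_gb(size, bits)
        print(f"{size}B @ {bits}-bit: ~{gb:.0f} GB, fits in 64 GB: {fits(size, bits)}")
```

By this estimate a 7B model at 16-bit precision needs about 14 GB, while a 70B model needs about 140 GB at 16-bit and about 35 GB at 4-bit, so in practice a 70B model on a 64GB device would typically be run in quantized form.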

The two products are complementary: MicroServer handles data and networking, while AI POD delivers compute power for heavy AI workloads. For example, you can build and store your personal knowledge base on MicroServer and leverage the AI POD to conduct inference and in-depth analysis on that data.

Use Cases that Go Beyond the “NAS”

While the LCMD MicroServer ecosystem might look like a NAS at first glance, its real power lies in orchestration. With built-in Docker and virtualization support, the system becomes an edge-native AI platform—capable of everything from home lab testing to media production workflows.

Whether customers are building their own GPT-style assistant, managing terabytes of family media, or creating AI-generated content at home, the LCMD MicroServer ecosystem is built to keep their data (and compute) right where they can see it.

Who It’s For

- Privacy-Conscious Users: Keep sensitive files and personal archives on their own devices

- Content Creators: Fast local processing for video editing and asset generation

- AI Developers: Run, test, and optimize models with full-stack access

- Power Users & Tech Enthusiasts: Build a modular, extensible local server ecosystem

Kickstarter Launch

The LCMD MicroServer and AI POD system will debut on Kickstarter on August 26, 2025. Early backers will gain access to exclusive discounts of up to $650. To stay informed, visit the pre-launch page: https://prelaunch.lcmd.cloud

PR

LCMD
